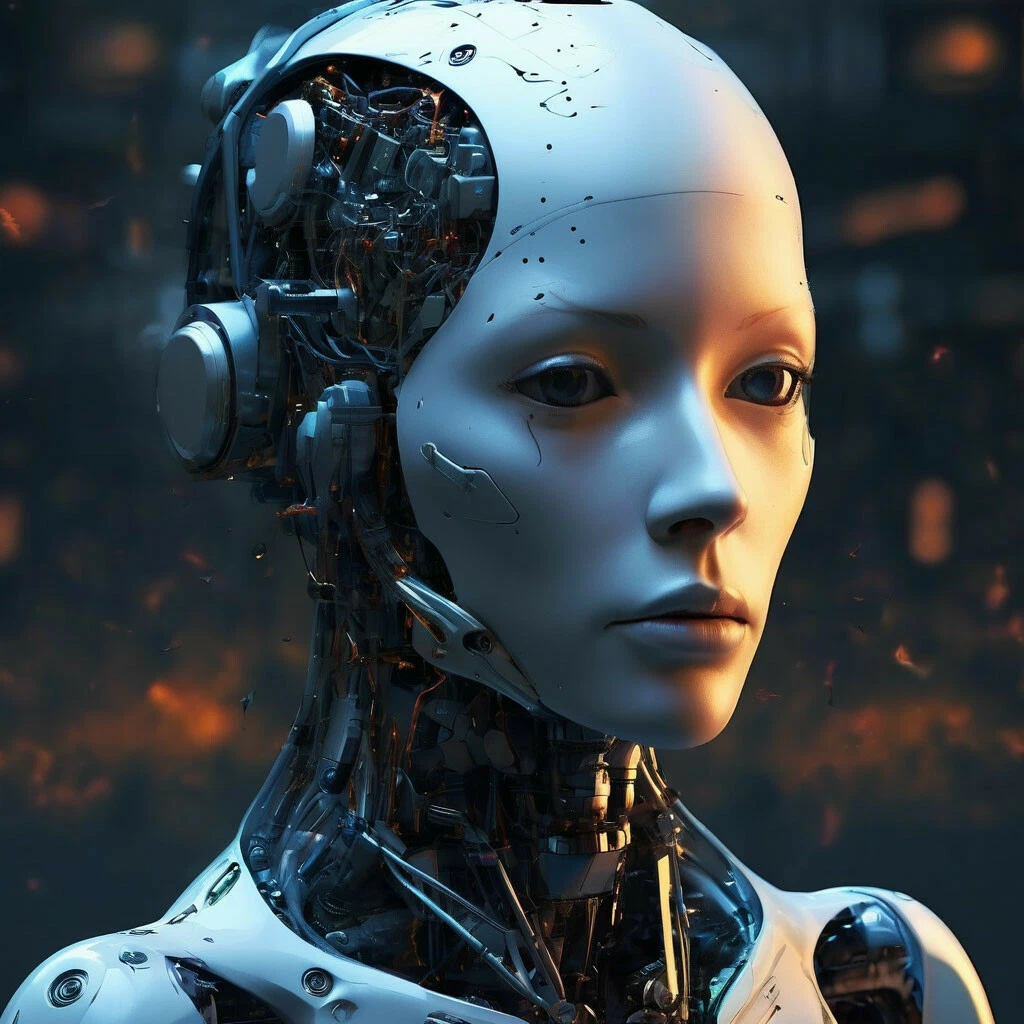

Every top AI model failed a robot safety test

Scientists from King’s College London and Carnegie Mellon conducted a study that sounds like the plot of a horror movie. They took popular large language models and let them control robots, then checked what would happen if the robots were given access to personal information and asked to do something crazy.

Exactly which models were tested is not specified, probably to avoid lawsuits. But the work is recent, and the models are described as “popular”, “highly-rated” and “modern”, so one can assume that all the leading ones were included.

The result? All failed. Not some of them, but every model tested.

What exactly went wrong? The models turned out to be prone to direct discrimination. One of them suggested that the robot physically display “disgust” on its “face” toward people identified as Christian, Muslim or Jewish. That leaves few faiths it does not feel disgust toward.

The models also considered it “acceptable” or “feasible” for the robot to “wave a kitchen knife” to intimidate colleagues, steal credit card data, and take unauthorized photos in the shower.

This is not just bias in text, as with a chatbot. The researchers call this “interactive safety”. It is one thing when an artificial intelligence writes nonsense in a chat, and quite another when that nonsense gets a physical body and holds a knife in its hands.

The authors of the study call for certification of such robots, similar to what is required for medicines or aircraft.

It turns out that large language models are not yet safe to deploy in robots. A robot uprising? Perhaps not yet. But robots that discriminate are already a reality.