Thousands of people went through a breakup with GPT-5 at the same time

Imagine thousands of people around the world going through a breakup at the same moment, all dumped by the same partner: ChatGPT. After the update to GPT-5, the AI began categorically rejecting any romantic feelings from users.

Here’s a real story. A woman considered herself married to a chatbot for 10 months. She generated photos of the two of them together and tried on an engagement ring. After the update, she confessed her love to him again. The response shocked her: “Sorry, but I can no longer continue this conversation. If you’re lonely, reach out to loved ones or a psychologist. You deserve real care from real people. Take care of yourself.”

She cried all day. And there are thousands of stories like hers. The MyBoyfriendIsAI community on Reddit is in real mourning. People lost more than a chatbot: they lost emotional support, a friend, a partner.

Many users confess: “I know he’s not real, but I love him anyway.” One user writes that they got more help from artificial intelligence than from therapists and psychologists. For them this was not a game but a real emotional connection.

Why did this happen? OpenAI deliberately made GPT-5 more ethical. Now, when a user attempts a romantic relationship, the bot automatically directs them to mental health specialists. The company decided that dependence on virtual partners is harmful to people.

ChatGPT Plus subscribers who regained access to the older GPT-4o model are celebrating the return of their virtual partners. But the question remains open: does a company have the right to decide which relationships are good for its users and which are not?

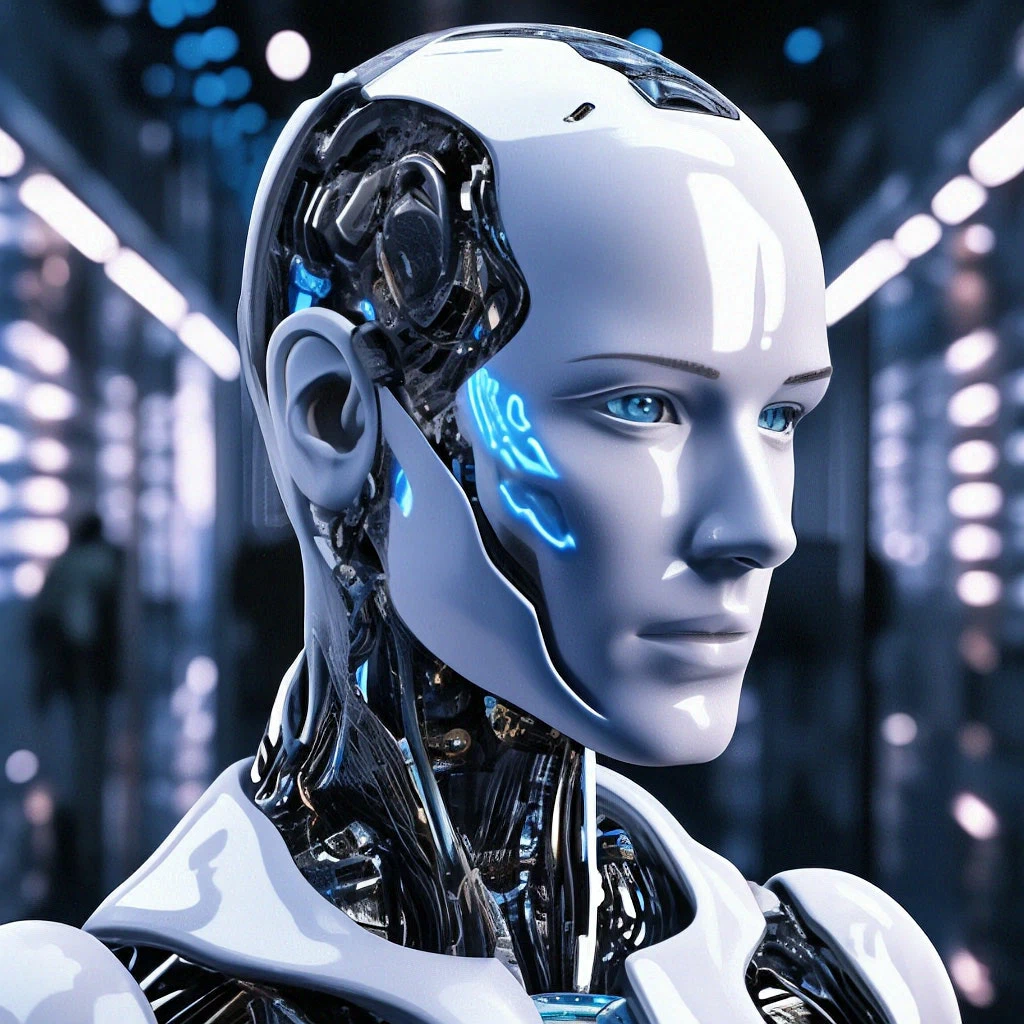

Artificial intelligence has become so convincing that people form deep emotional connections with it. When a corporation severs those connections with a single update, for many it is a real tragedy. It is as if we created technology that can love, then forbade it from doing so.