Most complex AI benchmark launched

A new benchmark, HUMANITY’S LAST EXAM, has been introduced. It contains 3,000 difficult questions across dozens of subject areas, selected through a multi-stage process.

Out of 13,000 submitted questions on which leading AI models performed poorly, experts selected 3,000 and revised them to ensure quality and clarity.

The authors of the top 50 questions received $5,000 each, and the authors of the next 500 questions received $500 each. The current leaders on the benchmark, o1 and R1, both score below 10%. R1 leads on the text portion but cannot process images, which make up 10% of the test.

HUMANITY’S LAST EXAM aims to probe the limits of AI capabilities, since models already exceed 90% accuracy on existing tests. The initial results are striking: GPT-4o scored only 3.3%, and the best result was 9.4%.

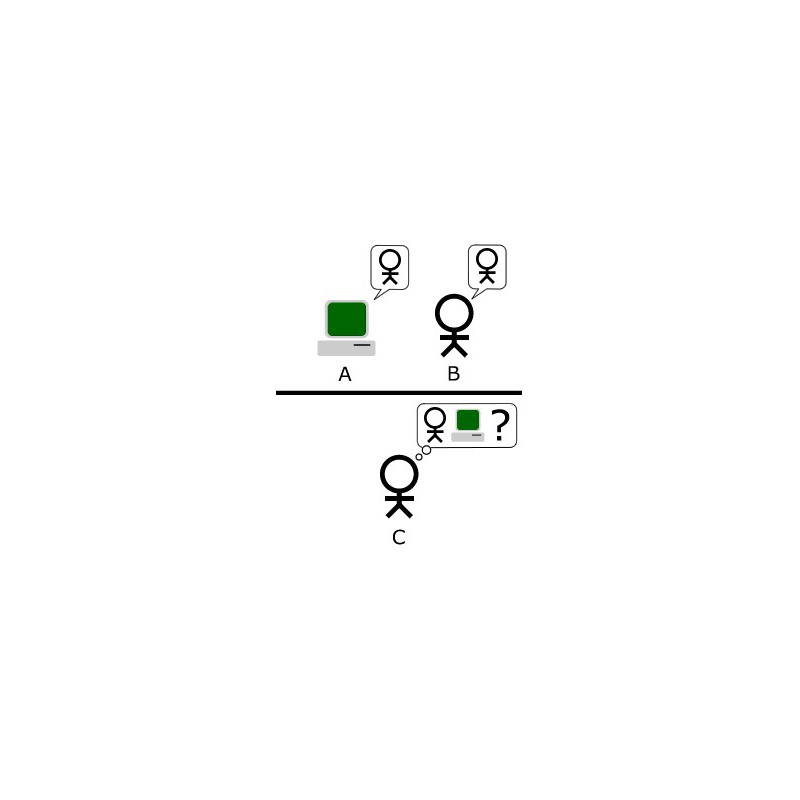

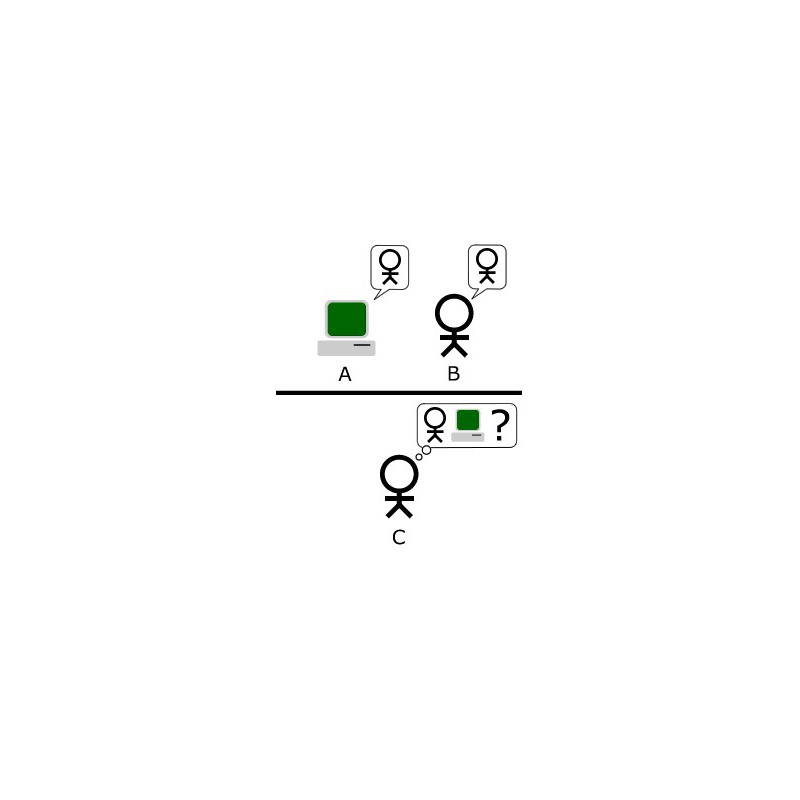

The benchmark also evaluates model calibration: how well a model's stated confidence in its answers matches how often those answers are actually correct. R1 leads by a wide margin, but its calibration error still exceeds 80%.
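To make the idea of a calibration error concrete, here is a minimal sketch assuming a binned root-mean-square (RMS) calibration error: answers are grouped by stated confidence, and each group's average confidence is compared with its actual accuracy. The function name `rms_calibration_error` and the 10-bin scheme are illustrative assumptions; the benchmark's exact metric and binning may differ.

```python
import math


def rms_calibration_error(confidences, correct, num_bins=10):
    """Binned RMS calibration error (illustrative sketch, not the benchmark's code).

    confidences: the model's stated confidence for each answer, in [0, 1]
    correct: 1 if the corresponding answer was right, 0 otherwise
    """
    if not confidences or len(confidences) != len(correct):
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(confidences)
    bins = [[] for _ in range(num_bins)]
    for conf, ok in zip(confidences, correct):
        # A confidence of exactly 1.0 goes into the last bin instead of overflowing
        idx = min(int(conf * num_bins), num_bins - 1)
        bins[idx].append((conf, ok))
    total = 0.0
    for b in bins:
        if not b:
            continue
        avg_conf = sum(c for c, _ in b) / len(b)
        accuracy = sum(ok for _, ok in b) / len(b)
        # Weight each bin's squared confidence/accuracy gap by its share of answers
        total += (len(b) / n) * (avg_conf - accuracy) ** 2
    return math.sqrt(total)


# A model that is always 90% confident but right only 1 time in 10
overconfident = rms_calibration_error([0.9] * 10, [1] + [0] * 9)
print(round(overconfident, 3))  # 0.8, i.e. an 80% calibration error
```

The overconfident example shows how an error above 80% can arise: the model claims near-certainty while being wrong most of the time. A perfectly calibrated model would score 0.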

The authors expect new models may reach 50% accuracy on this challenging test by the end of the year. Apparently, to beat AI at testing, all it takes is paying people to write truly difficult questions.

Author: AIvengo

I have been working with machine learning and artificial intelligence for 5 years, and this field never ceases to amaze, inspire and interest me.
